The Amazonian Netprobes

ITRS Group presents ‘The Amazonian Netprobes’, the first post in the three-part ITRS Cloud Series blog. In this series, our ITRS experts share how we can help you manage and monitor both your cloud environments and your on-premises environment.

You may have heard of Amazon Web Services (AWS) either from working in the financial sector or from binge-watching your favorite series on Netflix or Amazon Prime. AWS is used by popular commercial services such as Lyft, Slack, Airbnb, Spotify and Pinterest. Even some government and educational services host their websites and cloud computing with AWS. In this blog, we are going to explore the capabilities of Geneos within the AWS space, highlighting the key advantages and features of our software when it comes to scaling and monitoring your environment. For many years, Geneos has been used primarily for local (or on-prem) environments. This article shows how easily it can also be used for a hybrid AWS environment that consists of local and cloud servers. We will go over some Netprobe and Gateway features that can benefit any cloud engineer or operator who has to maintain this type of environment.

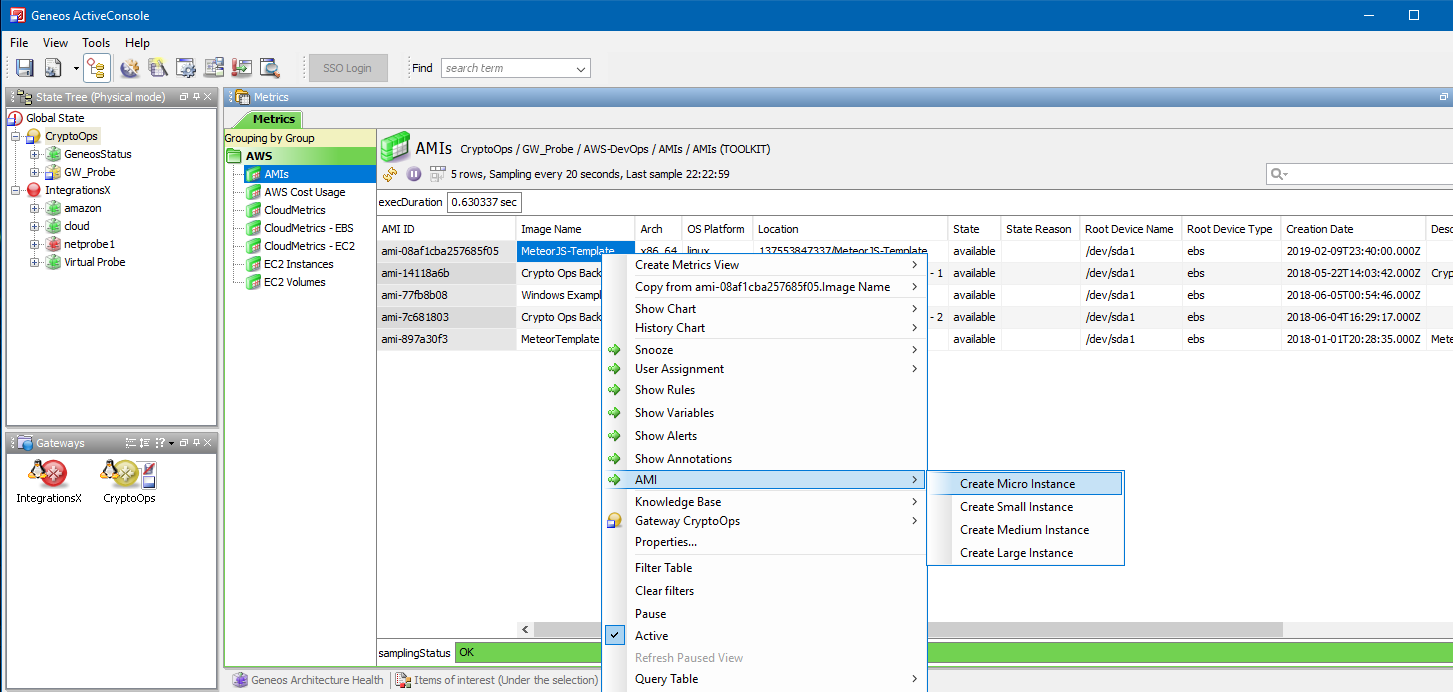

As some Geneos users may already be aware, the Self-Announcing feature allows a pre-configured netprobe to make a reverse connection to a Gateway and provide real-time monitoring. What may be less obvious are the benefits that combining Geneos with a cloud service integration can provide. In the example below, I have a view consisting of Amazon Machine Images (AMIs), each of which has a startup script that starts a self-announcing netprobe and connects it to a designated Gateway.

The launch command in this example creates and starts EC2 servers, each with a self-announcing netprobe that is already configured. Once these servers are up, the netprobe hosted on each instance automatically connects to our Gateway. Self-announcement is an expected feature for cloud, virtual machine or container-based services, but our integration with AWS also makes scaling more interactive, letting you automate tasks and measure a business application efficiently to achieve cost savings.
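For readers who want to picture what such a launch might look like outside of Geneos, here is a minimal sketch using boto3, the AWS SDK for Python. It is not the command used by the Geneos integration. The start script path, the instance type and the region are hypothetical placeholders, and the sketch assumes the AMI already contains a configured self-announcing netprobe.

```python
def build_launch_request(ami_id, count, instance_type="t3.micro",
                         start_script="/opt/netprobe/start_self_announce.sh"):
    """Build the keyword arguments for an EC2 RunInstances call.

    start_script is a hypothetical path baked into the AMI; it stands in
    for whatever command starts the self-announcing netprobe.
    """
    # EC2 runs the user data as a shell script on first boot.
    user_data = "#!/bin/bash\n" + start_script + "\n"
    return {
        "ImageId": ami_id,
        "MinCount": count,
        "MaxCount": count,
        "InstanceType": instance_type,
        "UserData": user_data,
    }


def launch(request, region="us-east-1"):
    """Send the request to AWS and return the new instance IDs."""
    import boto3  # third-party AWS SDK, imported only when actually launching

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**request)
    return [instance["InstanceId"] for instance in response["Instances"]]
```

Calling `launch(build_launch_request("ami-0123456789abcdef0", 3))` would request three instances from that (made-up) image. Keeping the request builder separate from the AWS call makes it easy to inspect what will be sent before spending any money.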

So, let’s pretend the author of this blog ran this command too many times after drinking too much coffee (or out of sheer excitement). We can switch to our EC2 Instance view to stop and shut down the extra instances, which releases resources and saves costs.
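The same clean-up can be sketched in a few lines of Python. This is only an illustration under my own assumptions, not what the EC2 Instance view does internally: it keeps the oldest `keep` instances, treats everything launched after them as surplus, and stops the surplus with boto3. The region default is a placeholder.

```python
from datetime import datetime


def surplus_instance_ids(instances, keep):
    """Return the IDs of every instance beyond the oldest `keep` ones.

    `instances` is a list of dicts with "InstanceId" and "LaunchTime" keys,
    the same field names EC2 uses when describing instances.
    """
    ordered = sorted(instances, key=lambda inst: inst["LaunchTime"])
    return [inst["InstanceId"] for inst in ordered[keep:]]


def stop_instances(instance_ids, region="us-east-1"):
    """Stop the given instances and return the IDs AWS reports as stopping."""
    if not instance_ids:
        return []
    import boto3  # third-party AWS SDK, imported only when actually stopping

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.stop_instances(InstanceIds=instance_ids)
    return [state["InstanceId"] for state in response["StoppingInstances"]]


# Example: three launches, of which only the first was intended.
launched = [
    {"InstanceId": "i-0c", "LaunchTime": datetime(2019, 1, 1, 9, 2)},
    {"InstanceId": "i-0a", "LaunchTime": datetime(2019, 1, 1, 9, 0)},
    {"InstanceId": "i-0b", "LaunchTime": datetime(2019, 1, 1, 9, 1)},
]
extra = surplus_instance_ids(launched, keep=1)
```

Note that stopping an instance is not the same as terminating it: a stopped instance no longer accrues compute charges, but its attached storage is kept and still billed.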

This example shows how Geneos can help you scale your AWS environment up or down. You may also have noticed that some of the probes disappeared from our view immediately. This is because the Gateway is configured to release and remove a netprobe once its connection has been timed out for more than 3 seconds. This short window gives the Gateway an opportunity to fire an alert, but since the cause here was a caffeinated human error, we are going to ignore these messages for now.

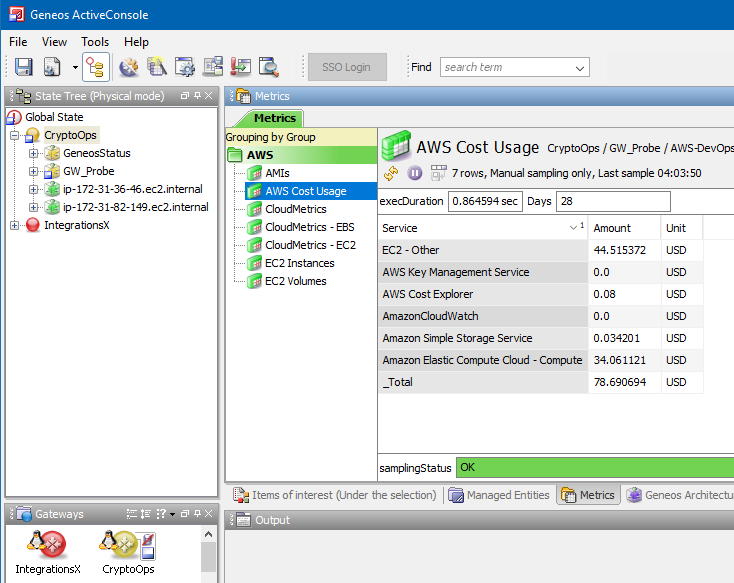

With the AWS integration, you can maintain and balance your EC2 environment through a rule or with a right-click of your mouse. After some time has passed, I’m now concerned that the project has consumed too much of my department’s budget. To ease my mind, I can switch to my Cost Usage dataview to examine how much was spent. Costs are broken down by service, which makes it easier for an operator to spot the largest one. With this data, you can apply rules that fire an action to alert a group once the budget threshold has been reached.

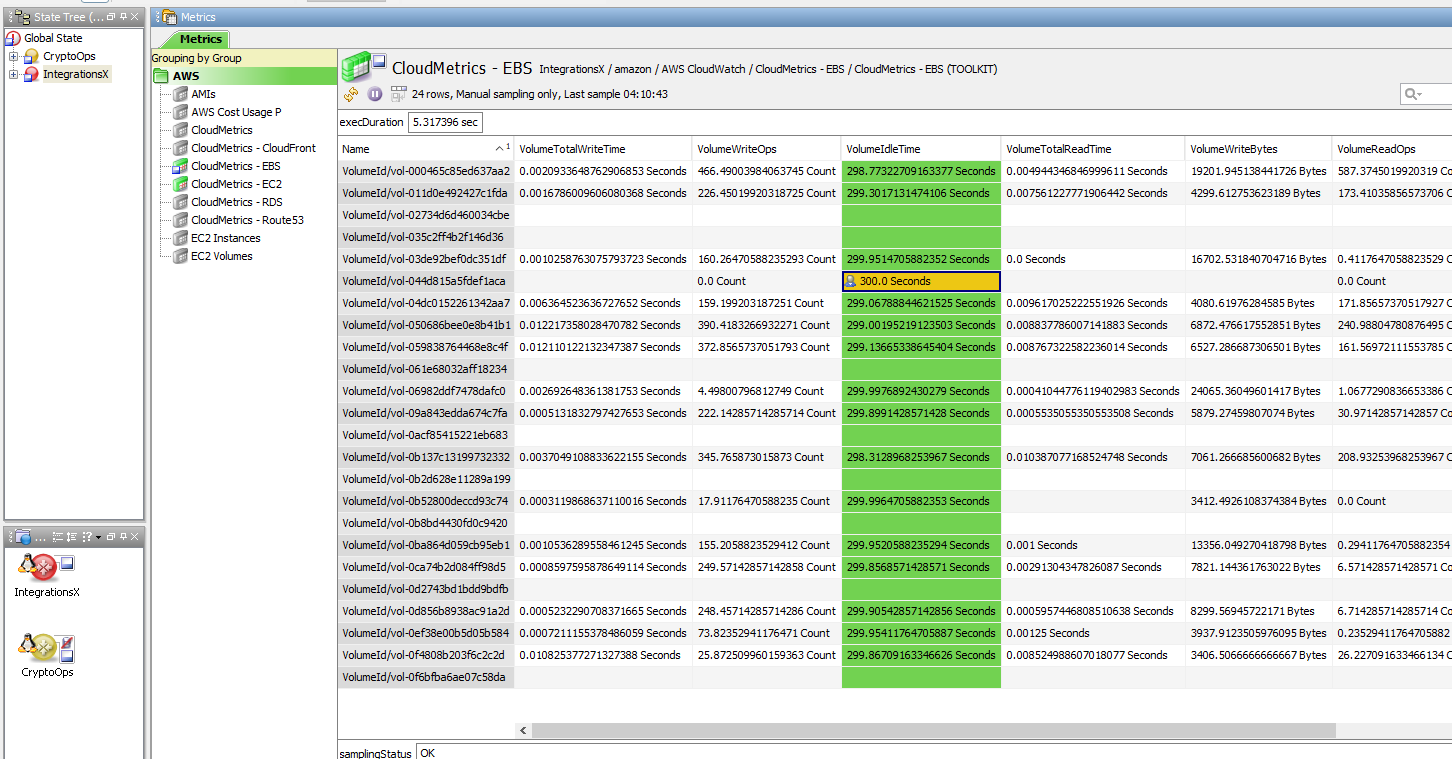
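To make the budget rule concrete, here is a small Python sketch of the same idea. It assumes cost data shaped like the `ResultsByTime` list returned by the AWS Cost Explorer `get_cost_and_usage` call when grouped by service. The sample figures and the budget are invented for illustration, and the Geneos rule itself would be configured in the Gateway rather than written like this.

```python
from decimal import Decimal


def cost_by_service(results_by_time, metric="UnblendedCost"):
    """Sum the cost of each service across all returned time periods."""
    totals = {}
    for period in results_by_time:
        for group in period.get("Groups", []):
            service = group["Keys"][0]
            amount = Decimal(group["Metrics"][metric]["Amount"])
            totals[service] = totals.get(service, Decimal("0")) + amount
    return totals


def budget_status(totals, budget):
    """Report total spend, the largest service, and whether the budget is reached."""
    spent = sum(totals.values(), Decimal("0"))
    largest = max(totals, key=totals.get) if totals else None
    return {"spent": spent, "largest_service": largest,
            "budget_reached": spent >= budget}


# Invented sample data covering two billing periods.
sample = [
    {"Groups": [
        {"Keys": ["Amazon EC2"], "Metrics": {"UnblendedCost": {"Amount": "40.00", "Unit": "USD"}}},
        {"Keys": ["Amazon S3"], "Metrics": {"UnblendedCost": {"Amount": "15.50", "Unit": "USD"}}},
    ]},
    {"Groups": [
        {"Keys": ["Amazon EC2"], "Metrics": {"UnblendedCost": {"Amount": "50.25", "Unit": "USD"}}},
    ]},
]
status = budget_status(cost_by_service(sample), budget=Decimal("100"))
```

Amounts are parsed with `Decimal` rather than `float` so that sums of currency values stay exact.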

A Geneos environment in the AWS space is a valuable asset for managing, budgeting and scaling your business, yet its greatest strength is monitoring and measuring application performance. In AWS, CloudWatch is used to monitor your resources and the applications you run within the space. Within our AWS integration, a sampler lists the CloudWatch services available to your account along with each service’s metric names. From this list, you can then set up another CloudWatch sampler configured for a particular service.
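As a rough picture of what such a listing contains, the sketch below groups CloudWatch metric names by namespace (the CloudWatch term for a service such as `AWS/EC2` or `AWS/EBS`). It uses boto3's `list_metrics` paginator to fetch the data. This is my own illustration of the idea, not the sampler's implementation, and the region default is a placeholder.

```python
def metrics_by_namespace(metrics):
    """Group metric names under their namespace, dropping duplicates.

    `metrics` is a list of dicts with "Namespace" and "MetricName" keys,
    as found in the "Metrics" list of a CloudWatch ListMetrics response.
    """
    grouped = {}
    for metric in metrics:
        grouped.setdefault(metric["Namespace"], set()).add(metric["MetricName"])
    return {ns: sorted(names) for ns, names in sorted(grouped.items())}


def fetch_metrics(region="us-east-1"):
    """Fetch every metric visible to the account in one region."""
    import boto3  # third-party AWS SDK, imported only when actually fetching

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    found = []
    for page in cloudwatch.get_paginator("list_metrics").paginate():
        found.extend(page["Metrics"])
    return found


# Example with hand-written entries instead of a live call.
listing = metrics_by_namespace([
    {"Namespace": "AWS/EC2", "MetricName": "CPUUtilization"},
    {"Namespace": "AWS/EBS", "MetricName": "VolumeIdleTime"},
    {"Namespace": "AWS/EBS", "MetricName": "VolumeReadOps"},
    {"Namespace": "AWS/EBS", "MetricName": "VolumeIdleTime"},
])
```

The same metric name appears once per dimension combination (for example, once per volume), which is why duplicates are collapsed before display.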

By taking advantage of the rules engine, we can create actionable alerts that help us assess our application’s performance and warn us when things go wrong. This view shows the metrics for our Elastic Block Store (EBS) volumes, which are persistent volumes attached to our live EC2 instances. Here, we’re concerned about any volume that has a high idle time. Luckily, this drive belongs to an instance that is in the process of shutting down, so I’ll snooze it for now.
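The logic behind an idle-time rule like this one can be sketched in Python as follows. CloudWatch reports `VolumeIdleTime` as the number of seconds in a period during which no read or write operations were submitted, so dividing by the period length gives an idle ratio. The 50% and 90% thresholds, the 300-second period and the snooze set are my own assumptions for illustration, not Geneos defaults.

```python
def idle_severity(idle_seconds, period_seconds, warn=0.5, critical=0.9):
    """Classify a volume by the share of the period it spent idle."""
    ratio = idle_seconds / period_seconds
    if ratio >= critical:
        return "critical"
    if ratio >= warn:
        return "warning"
    return "ok"


def evaluate(volumes, period_seconds=300, snoozed=()):
    """Return {volume_id: severity} for every non-snoozed volume that is not ok.

    `volumes` maps a volume ID to its idle seconds within the period.
    """
    alerts = {}
    for volume_id, idle_seconds in volumes.items():
        severity = idle_severity(idle_seconds, period_seconds)
        if severity != "ok" and volume_id not in snoozed:
            alerts[volume_id] = severity
    return alerts


# vol-a belongs to an instance that is shutting down, so it is snoozed.
readings = {"vol-a": 290, "vol-b": 30, "vol-c": 200}
alerts = evaluate(readings, snoozed={"vol-a"})
```

Snoozing only hides the alert. The reading is still evaluated, so the volume reappears as soon as it is removed from the snooze set.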

With the AWS integration, we are able to control an entire environment made up of servers based in the AWS space and servers running locally. This article covered the features you would expect when using Geneos with a cloud environment. In the next posts in this series, we will go over other features that we can take advantage of with AWS.