How to save on cloud costs with workload management – Part 2: Right-sizing and right-buying

In the first part of this blog series, we told you how you can address the first thing every cloud consultant will recommend you do: switch off machines when they don’t need to be running. We have also described how ITRS’s Cloud Cost Optimisation tooling can be used to identify these time periods.

Once you’ve switched off the machines that don’t need to be running, you need to make sure that the machines that are running are optimally configured. You want to do that before you invest in any reservations or savings plans.

If you commit to reservations for three years before right-sizing, there’s a good chance you’ll commit to spending more than you need to – and when you do get round to right-sizing, it won’t make any difference to your bill.

Measure, plan, adapt and save

If your applications have been running in the cloud for some time, they’re almost certainly over-provisioned and you are almost certainly paying too much for them.

You will likely be familiar with the DevOps lifecycle shown below.

Most organisations do a pretty good job at the 'develop', 'build' and 'test' parts, as well as the automation of releases and operating the application in a production environment. But they tend not to do such a good job at the final stage: 'measure' and 'plan'.

When deploying a new application, or migrating a non-cloud application, almost everyone will naturally over-provision to ensure there are no early capacity issues. They’ll almost certainly have bought machines larger than they need to be. Once the application has bedded in, settled into production and is running with no significant issues, there’ll be no appetite to make changes.

DevOps should be continuous. You should constantly be measuring the performance and capacity of an application, and, in the cloud, you should be considering the costs of running that application.

Are those instances the appropriate size? Can you measure the utilisation of those instances or machines, feed that back to develop and test and recommend new configurations or changes that could reduce the running costs of the application with no impact on performance? This can’t be a one-off exercise.

Right-size

There are many approaches that can be taken with right-sizing. Most tools will use average utilisation over a configurable time period. As I mentioned in part one, averages aren’t sensitive enough to find those regular but required short-term peaks. So, at ITRS, we recommend using much more sophisticated, longer-term analytics. This allows you to understand the demand profile of a workload and clearly demonstrate the options available.
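To illustrate why an average can hide a regular peak, here is a minimal sketch. The utilisation figures are invented for the example, and the nearest-rank `percentile` helper is an assumption of this sketch, not the method Capacity Planner uses.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical hourly CPU utilisation (%) for one week:
# mostly idle, with a two-hour batch job every day.
cpu = ([5.0] * 22 + [90.0] * 2) * 7

average = mean(cpu)        # looks like a nearly idle machine
p95 = percentile(cpu, 95)  # exposes the daily batch window
peak = max(cpu)

print(f"average={average:.1f}%  p95={p95:.1f}%  peak={peak:.1f}%")
```

Sizing this machine on its average alone would starve the daily job, whereas the 95th percentile shows the demand it actually has to meet.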

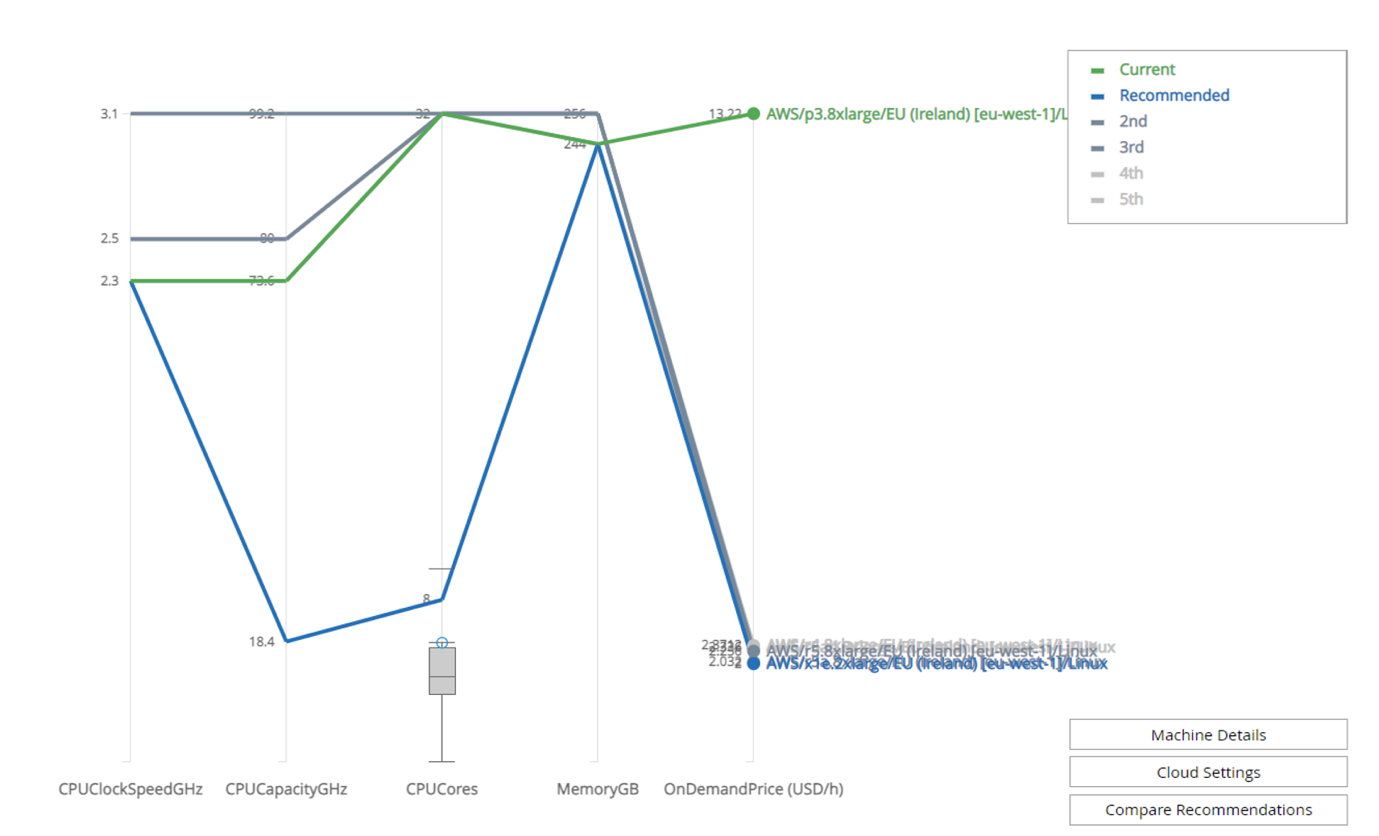

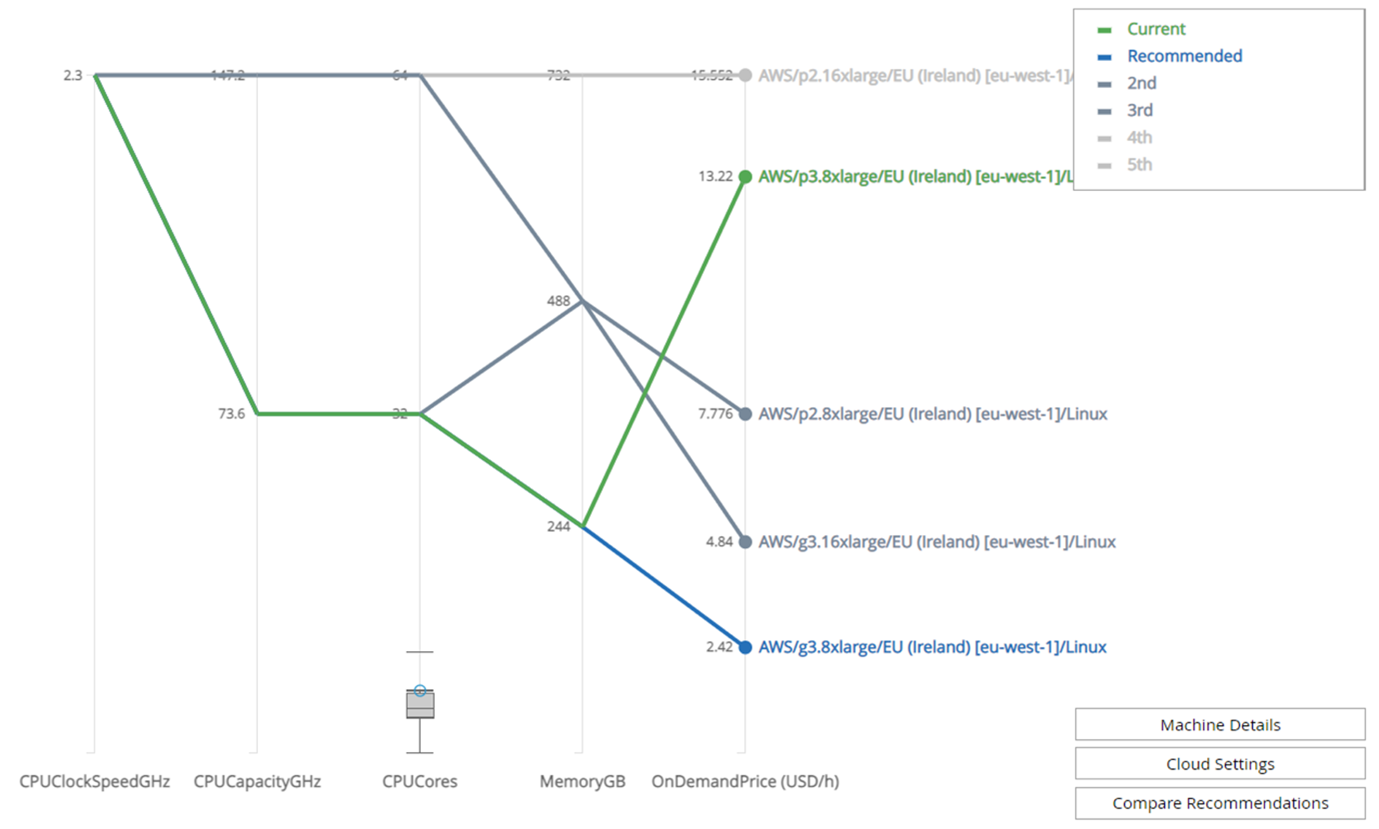

Let’s look at an example – this is a recommendation from ITRS Capacity Planner for a machine with very little activity.

Working from left to right, we see each of the metrics considered when making a recommendation. You can see clock speed, capacity, core utilisation, memory and on-demand price. As is quite common, we don’t have a memory utilisation figure. Memory utilisation can have a significant impact on right-sizing and we’ll talk about that later.

The ‘shape’ of each machine is drawn as a line, the green line being the current configuration, showing the ‘shape’ of the p3.8xlarge. The other lines are recommendations suitable for the equivalent ‘shape’ with the blue line being the cheapest recommendation.

Capacity Planner will provide up to five alternatives for the machine. The closer to the bottom the point on the rightmost axis is, the cheaper that machine is to run.

You can see that this machine currently has 32 cores and 244GB of RAM. It’s the p3.8xlarge instance type and costs just over $13 per hour to run. At its peak, it’s using less than 30% of that available compute capacity. 95% of the time, CPU is less than 18%. You can tell this from the box plot on the CPU Cores axis. The box plot shows the variance of the metric over the period of time being used to model the demand, which will typically be around three months.

The upper line is the peak during that time, while the next line with the blue circle around it is the 95th percentile (or %ile). This is the value that the CPU stays at or below for approximately 95% of the time. To flip that around, for 5% of the time, CPU on this machine is at that level or higher, up to the peak indicated.

The grey box area is where the metric spends most of its time, bouncing between the upper and lower quartiles. The box plot does a great job at summarising the activity of a metric over a long period of time in a single visualisation.

Looking at machine details, you can see when the peak occurs and whether you need to consider that in your right-sizing calculations. In this case, the peak does not need to be considered. The 95%ile will be sufficient.
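The figures a box plot like this summarises can be sketched as follows. The sample data and the nearest-rank `percentile` helper are assumptions made for illustration; Capacity Planner may calculate its quartiles and percentiles differently.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def boxplot_summary(samples):
    """Return the values drawn on the box plot: quartiles, median, 95th percentile and peak."""
    return {
        "lower_quartile": percentile(samples, 25),
        "median": percentile(samples, 50),
        "upper_quartile": percentile(samples, 75),
        "p95": percentile(samples, 95),
        "peak": max(samples),
    }


# Hypothetical CPU utilisation samples (%), evenly spread from 0.25% to 25%.
cpu_percent = [i * 0.25 for i in range(1, 101)]
summary = boxplot_summary(cpu_percent)

for name, value in summary.items():
    print(f"{name}: {value:.2f}%")
```

Run over around three months of samples, a summary like this gives the peak, the 95%ile and the quartile box described above.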

The recommendation from Capacity Planner is to use the x1e.2xlarge. If you use that, you will make significant savings of $11 per hour on this long-running machine.
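To put that hourly figure in context, here is a small worked calculation. It assumes the machine runs around the clock for a full year at on-demand rates, which is an assumption of this sketch and not a figure from the recommendation itself.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the machine is never switched off


def annual_saving(hourly_saving_usd, hours_running=HOURS_PER_YEAR):
    """Scale an hourly saving up to the number of hours the machine actually runs."""
    return hourly_saving_usd * hours_running


saving = annual_saving(11)  # the $11 per hour saving quoted above
print(f"${saving:,} per year")
```

If the machine is also switched off outside working hours, as described in part one, pass the real number of running hours as `hours_running`.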

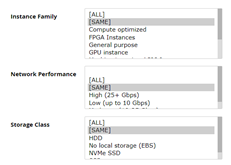

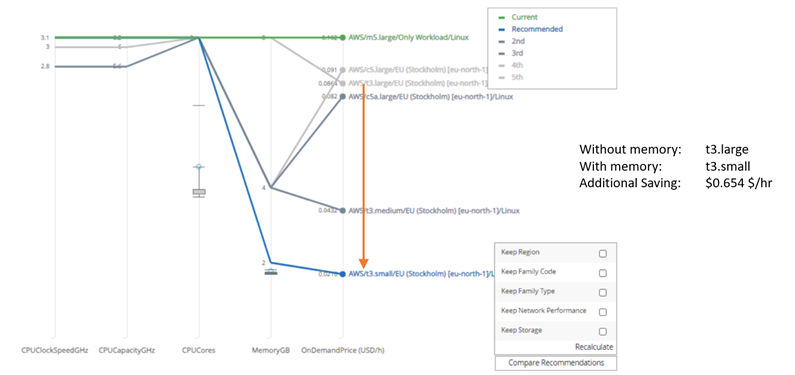

However, you may speak to the application architecture team and be informed that you can’t use that instance type – this application needs an instance from the same family, and you can’t compromise on storage or network either.

Capacity Planner allows you to take that into consideration, and you can do so by re-running the recommendation on demand but ensuring that the family, network and storage configuration will remain the same.

You will then be presented with a new recommendation.

Now, you get a much clearer view of the difference in ‘shape’ between the current p3.8xlarge and the g3.8xlarge.

This demonstrates that there are still significant savings to be had while keeping the same instance family, storage and network configuration. Based on the utilisation, the g3.8xlarge would be sufficient and still yield significant savings.

In the above recommendations, we’ve had to assume that the allocated memory capacity could not be changed. By default, most cloud providers don’t provide insight into memory utilisation without extra costs involved. But having insight into memory can lead to significantly more savings.

At ITRS, we would recommend building a history of at least one month of memory data for targeted machines, to allow for more effective right-sizing. You can see below the impact that memory can have.

In this example, the m5.large machine was configured with 8GB of memory. From a pure CPU perspective, it was making good use of the allocated capacity. However, it could be right-sized to a burstable t3.large instance and make further savings while not incurring excess credit utilisation.

Now that we can see memory utilisation, it’s possible to right-size even more aggressively. The memory utilisation demand profile indicates that 2GB would be sufficient. This allows for an even cheaper recommendation – from the t3.large to the t3.small, which adds up to a further $0.654 per hour saving.
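The effect of memory insight on the recommendation can be sketched as a simple "smallest size that fits" search. The T3 vCPU and memory figures below are the published sizes for those instance types. The demand figures and the `smallest_fit` helper are hypothetical, and the sketch ignores burst credits, pricing and headroom policy, all of which a real recommendation would consider.

```python
# (instance type, vCPUs, memory in GiB), ordered from smallest to largest.
T3_SIZES = [
    ("t3.small", 2, 2.0),
    ("t3.medium", 2, 4.0),
    ("t3.large", 2, 8.0),
    ("t3.xlarge", 4, 16.0),
]


def smallest_fit(cpu_needed, mem_needed_gib, catalogue=T3_SIZES):
    """Return the first (smallest) instance type that covers both CPU and memory demand."""
    for name, vcpus, mem_gib in catalogue:
        if vcpus >= cpu_needed and mem_gib >= mem_needed_gib:
            return name
    return None  # nothing in this family is large enough


cpu_p95_cores = 1.2  # hypothetical 95%ile CPU demand, in cores

# Without memory data, the safe assumption is to keep the 8GB already allocated.
without_memory_insight = smallest_fit(cpu_p95_cores, mem_needed_gib=8.0)

# With a measured memory demand profile (hypothetical 95%ile of 1.8GB).
with_memory_insight = smallest_fit(cpu_p95_cores, mem_needed_gib=1.8)

print(without_memory_insight, "->", with_memory_insight)
```

The CPU demand is the same in both calls; only the memory figure changes, and that alone moves the answer from the t3.large to the t3.small.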

Make good use of what you’ve paid for

Memory is particularly important if you want to make good use of your previously bought reservations.

Reservations or Reserved Instances (RIs), while being relatively inflexible, still offer the most significant savings – but it’s easy to lose track of their use and find your bill creeping upwards again.

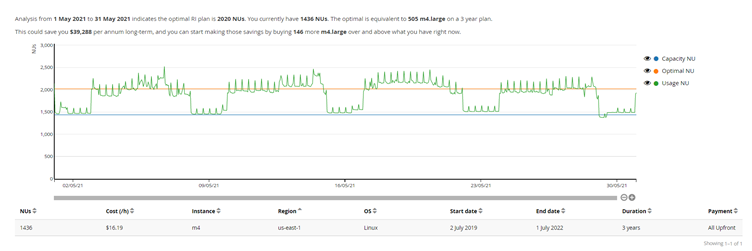

In the following example, the use of m4.large instances across the entire estate is larger than anticipated when the reservations were purchased.

The lower blue line shows the current investment in RIs for the m4 family running Linux machines in the us-east-1 region. The orange line shows the optimal investment for maximum savings. You could save a further $39,288 per year by buying 146 m4.large RIs. But is there an alternative to spending more money? Reservations can be re-used by instances in the same family, provided they share the same platform and region – so, should someone reserve a number of m4.large instances, m4.xlarge and m4.2xlarge instances can be covered by those reservations.

The larger the instance type, the more of that pool of reservations it will consume. In the above example, the system is recommending that you buy 146 more m4.large RIs. You can see from the profile that the optimal (orange line) is significantly higher than your current investment.
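How much of a reservation pool each size consumes can be sketched with AWS's published normalisation factors for instance size flexibility. The `large_ris_consumed` helper is hypothetical and only covers the sizes listed in the table.

```python
# AWS normalisation factors used for Reserved Instance size flexibility.
NORMALISATION_FACTOR = {
    "large": 4,
    "xlarge": 8,
    "2xlarge": 16,
    "4xlarge": 32,
    "10xlarge": 80,
    "16xlarge": 128,
}


def large_ris_consumed(instance_type):
    """Number of '.large' reservations one running instance of this type uses up."""
    _family, size = instance_type.split(".")
    return NORMALISATION_FACTOR[size] / NORMALISATION_FACTOR["large"]


for instance_type in ("m4.large", "m4.xlarge", "m4.2xlarge", "m4.16xlarge"):
    print(instance_type, "uses", large_ris_consumed(instance_type), "m4.large RIs")
```

Each step up in size doubles the number of m4.large reservations an instance absorbs, which is why a handful of large machines can exhaust a reservation purchase.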

However, there’s an alternative to this – you could avoid buying any more reservations if you right-size and right-buy the instances you currently have. Let’s have a look.

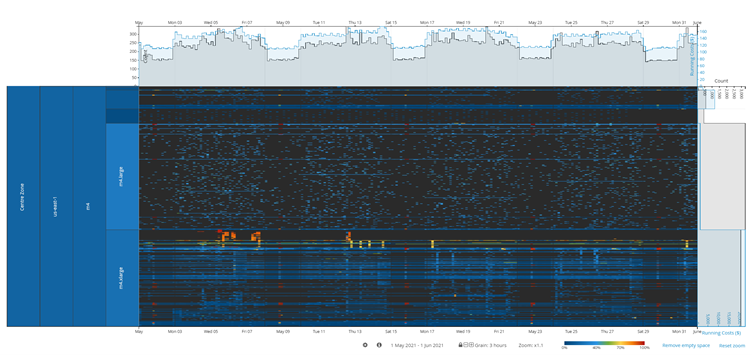

Here’s the ‘timeburst’ of all m4 family instances running in the us-east-1 region, across all accounts.

If you remember the timeburst from the first part of this series, you’ll see the heatmap clearly demonstrates that most instances have very low levels of utilisation. You can also see many long-running m4.xlarge instances.

These long-running m4.xlarge instances, and in some cases 16xlarge instances, will be making use of that reservation investment, and perhaps they shouldn’t.

Right-sizing within an instance family can significantly reduce the utilisation of those reservations and free up ‘capacity’ for more instances. The smaller an instance is within the same family, the less capacity it will use within that RI. In this case, I could right-size almost all of my instances larger than xlarge, and remove the need to make any further purchases.
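The effect on reservation coverage can be sketched with a before-and-after comparison. The fleet sizes and the number of reservations owned are invented for the example; only the normalisation factors are AWS's published values.

```python
# AWS normalisation factors for the sizes used in this example.
FACTOR = {"large": 4, "xlarge": 8, "2xlarge": 16}


def units_needed(fleet):
    """Total normalised units consumed by a fleet given as {size: running instance count}."""
    return sum(FACTOR[size] * count for size, count in fleet.items())


def spare_large_ris(fleet, large_ris_owned):
    """Spare (positive) or missing (negative) m4.large reservations for this fleet."""
    owned_units = large_ris_owned * FACTOR["large"]
    return (owned_units - units_needed(fleet)) / FACTOR["large"]


OWNED = 200  # hypothetical number of m4.large RIs already purchased

before = {"large": 100, "xlarge": 60, "2xlarge": 10}  # hypothetical current fleet
after = {"large": 170}  # the same 170 machines, all right-sized to m4.large

print("before:", spare_large_ris(before, OWNED))
print("after: ", spare_large_ris(after, OWNED))
```

In this invented fleet the same machines go from needing 60 more m4.large reservations to leaving 30 unused, without buying anything.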

To do this accurately, we would highly recommend insight into memory utilisation. Instance sizes double in CPU and memory capacity as they double in price.

Right-sizing within the same family type requires insight into memory demand to determine if memory capacity can be safely reduced and the machine made smaller, while remaining in the same family.

Right-sizing can have a significant impact on your bill, particularly if you do so before committing to an hourly spend in exchange for discounts. It must also be a continuous activity to ensure good practice and optimum configurations are always used with your workloads.

With deep analytical insight, right-sizing can help you make better use of your investment and run your cloud more cost-effectively.